Finding a target: a case study in Xamarin development

I've been developing with Xamarin for about a month in an effort to extend Tenterhook to mobile devices. In a previous post I covered some of the architectural concepts that I thought might apply. As it turns out, almost every tenet of software development that's been evangelized for ages (separation of concerns, the DRY principle, et al.) has been essential so far.

The real-world challenge of finding a target

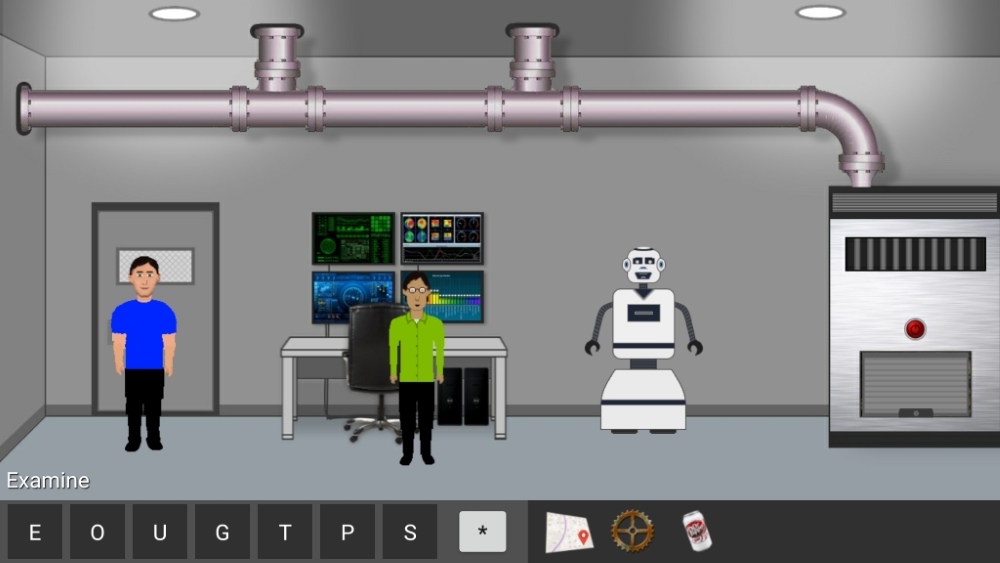

Below is a screenshot from my Android phone, a scene from a "sandbox" adventure I'm using for development (kindly excuse the ugly controls at the bottom, they're placeholders at the moment):

If you're familiar with old-school point-and-click adventure games, you know the drill. You pick a verb/command (e.g. "examine") and a target (e.g. "desk") to make things happen. This is the heart and soul of any adventure, and on the surface it seems like an easy mechanism to implement.

... it is, right? Ehhhhh...

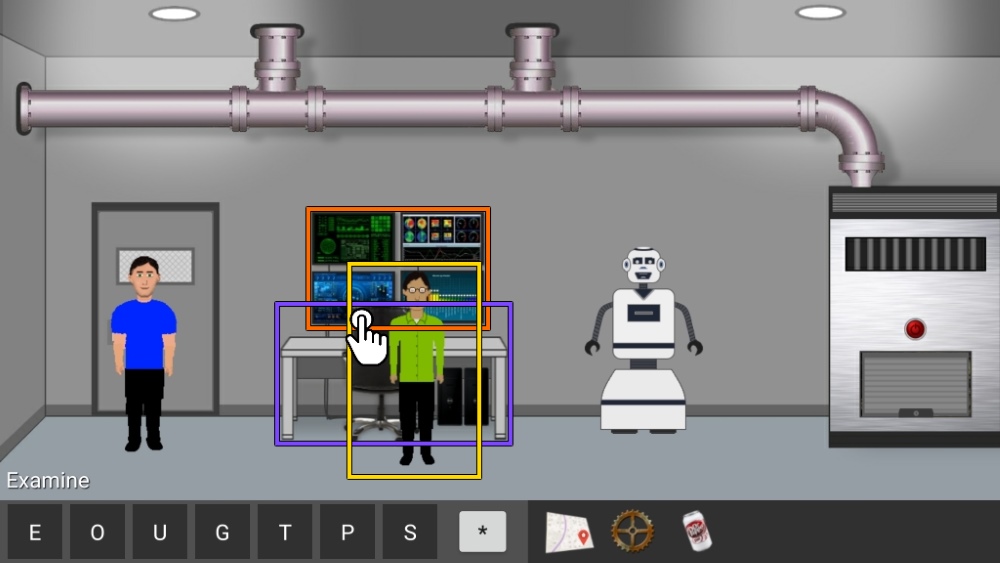

Consider the image below, where the user taps a certain spot (represented by the hand icon) in an attempt to pick a target:

Suddenly it gets a bit more complicated. You'd probably expect the target to be the desk/chair. But if we rely on Android's hit-testing logic, the actual target will be the character in the green shirt.

The character sits in an Android ImageView, which occupies more space than just the lit pixels. In fact, its bounding box (the yellow box above) is considerably larger and includes transparent pixels. The desk and chair (purple box) behind it have transparent areas too. Same goes for the monitors (orange box). Android doesn't care whether you tapped an opaque or transparent pixel; you tapped the bounding box of the ImageView in front of everything else, so that's where the story ends.

And so, the story begins

So what do we do? Let's start with what we can easily figure out:

- The coordinates of the touch.

- The dimensions and bounding boxes of all interactive targets (e.g. desk, monitor, character).

- Which targets have a bounding box that includes the coordinates (making them eligible).

- The depth (z-index) of eligible targets (foreground wins over background).
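
Because those four items are pure geometry, they can live in shared code. Here's a minimal sketch in Python (standing in for the C# a Xamarin project would use); the `Bounds` and `Target` types, the coordinates, and the z-index values are all hypothetical, and boxes are treated as half-open (right and bottom edges excluded):

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Bounds:
    """Hypothetical screen-space bounding box; right/bottom edges excluded."""
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom


@dataclass
class Target:
    name: str
    bounds: Bounds
    z: int  # depth: larger values are closer to the viewer


def eligible_targets(targets: List[Target], x: int, y: int) -> List[Target]:
    """Keep targets whose bounding box contains the touch point,
    ordered front to back (highest z first)."""
    eligible = [t for t in targets if t.bounds.contains(x, y)]
    return sorted(eligible, key=lambda t: t.z, reverse=True)


scene = [
    Target("monitors", Bounds(60, 40, 180, 130), z=1),
    Target("desk", Bounds(40, 120, 260, 300), z=2),
    Target("character", Bounds(150, 60, 300, 320), z=3),
]
print([t.name for t in eligible_targets(scene, 170, 125)])
# ['character', 'desk', 'monitors']
```

Note that the character still comes out on top here: bounding boxes alone can't tell us the tap landed on a transparent part of its ImageView, which is exactly the problem described earlier.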

These details apply whether the context is HTML, Android, or iOS, so they're candidates for "common" code. But we're not done. Even if we tap the topmost target, we expect it to be ignored if the area we tapped is transparent. But what if Android and iOS have dramatically different approaches to detecting transparent pixels? We need to separate "core" concerns from platform-specific ones, and that's where thoughtful planning comes in.
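
One way to draw that line, sketched under assumptions: shared code owns the front-to-back walk over eligible targets, and a hypothetical `TransparencyRenderer` interface asks the platform the single question only it can answer. On Android an implementation might read the alpha of the tapped pixel from the view's bitmap; here a `FakeRenderer` backed by a fixed set of opaque points stands in so the example runs anywhere:

```python
from abc import ABC, abstractmethod
from typing import List, Optional, Set, Tuple


class TransparencyRenderer(ABC):
    """Hypothetical core interface: the one question only the platform can answer."""

    @abstractmethod
    def is_opaque(self, target: str, x: int, y: int) -> bool:
        ...


def first_opaque(candidates: List[str], x: int, y: int,
                 renderer: TransparencyRenderer) -> Optional[str]:
    """Shared logic: given eligible targets already ordered front to back,
    return the first one whose pixel under the touch is opaque."""
    for target in candidates:
        if renderer.is_opaque(target, x, y):
            return target
    return None  # every eligible target was transparent at this point


class FakeRenderer(TransparencyRenderer):
    """Stand-in for an Android or iOS implementation: a fixed set of opaque
    (target, x, y) points instead of real pixel data."""

    def __init__(self, opaque_points: Set[Tuple[str, int, int]]):
        self.opaque_points = opaque_points

    def is_opaque(self, target: str, x: int, y: int) -> bool:
        return (target, x, y) in self.opaque_points


renderer = FakeRenderer({("desk", 200, 200)})
# The character is in front but transparent at (200, 200), so the desk wins.
print(first_opaque(["character", "desk"], 200, 200, renderer))  # desk
```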

Send in the renderers!

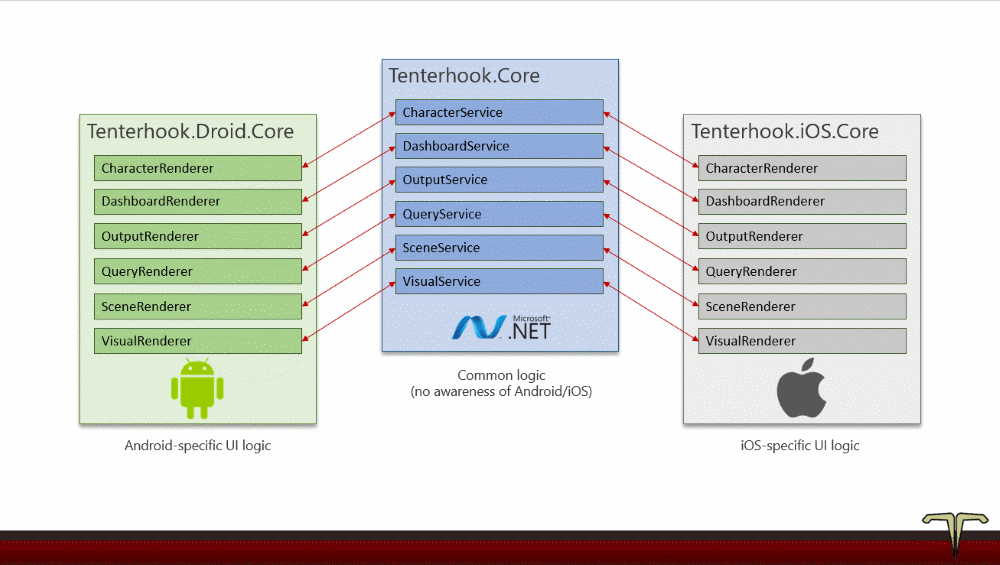

The graphic below illustrates an approach I've taken with Tenterhook:

The Tenterhook.Core assembly hosts a set of services which handle the core/common logic. It also defines a set of "renderer" interfaces, and Tenterhook.Droid.Core and Tenterhook.iOS.Core are responsible for providing their respective implementations.
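
A rough sketch of that split, with hypothetical names (`SceneRenderer`, `SceneService`, `DroidSceneRenderer`) rather than Tenterhook's real types: the interface and the service live in the core layer, and the platform layer only implements the interface. The stand-in renderer records calls instead of creating views:

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

# --- Core layer (hypothetical names): shared logic and renderer interfaces ---


class SceneRenderer(ABC):
    """Interface defined in core; each platform supplies an implementation."""

    @abstractmethod
    def add_element(self, name: str, z: int) -> None:
        ...


class SceneService:
    """Shared logic: decides the draw order, then delegates drawing."""

    def __init__(self, renderer: SceneRenderer):
        self.renderer = renderer

    def load(self, elements: List[Tuple[str, int]]) -> None:
        # Add back to front (lowest z first), assuming the platform stacks
        # later additions on top of earlier ones.
        for name, z in sorted(elements, key=lambda e: e[1]):
            self.renderer.add_element(name, z)


# --- Platform layer (hypothetical): Android-specific work only ---


class DroidSceneRenderer(SceneRenderer):
    """Stand-in for an Android renderer. A real one might create an
    ImageView per element; this one just records the calls."""

    def __init__(self) -> None:
        self.added: List[str] = []

    def add_element(self, name: str, z: int) -> None:
        self.added.append(name)


renderer = DroidSceneRenderer()
SceneService(renderer).load([("character", 3), ("desk", 2), ("monitors", 1)])
print(renderer.added)  # ['monitors', 'desk', 'character']
```

The service never references Android types, so an iOS renderer could be dropped in without touching it.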

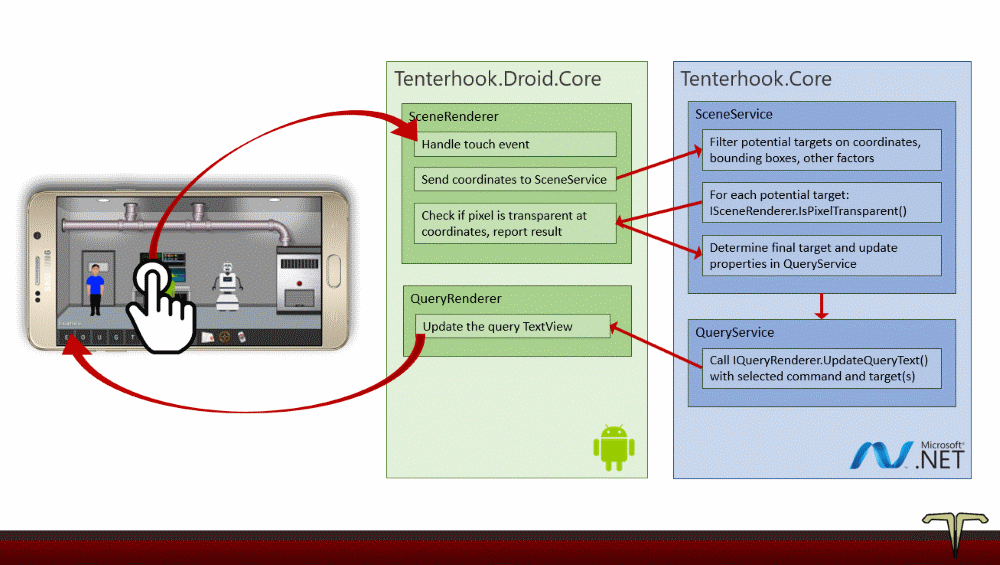

Given this approach, here's an illustration of what happens when a user taps the screen:

In this case, two services and associated renderers work together to identify the target and show updated query text.
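
The tap flow can be sketched the same way. Everything here is hypothetical (`TargetService`, `QueryService`, the renderer interfaces, and the fake renderers): the target service finds the front-most eligible target that is opaque under the tap, and the query service combines the selected verb with that target into query text for its renderer to display:

```python
from abc import ABC, abstractmethod
from typing import List, Optional, Set, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), half-open


# Core interfaces (hypothetical): implemented per platform
class TargetRenderer(ABC):
    @abstractmethod
    def is_opaque(self, name: str, x: int, y: int) -> bool: ...


class QueryRenderer(ABC):
    @abstractmethod
    def show_query(self, text: str) -> None: ...


# Core services (hypothetical)
class TargetService:
    """Finds the front-most target whose pixel under the tap is opaque."""

    def __init__(self, renderer: TargetRenderer, targets: List[Tuple[str, Box, int]]):
        self.renderer = renderer
        self.targets = targets  # (name, box, z); larger z is in front

    def resolve(self, x: int, y: int) -> Optional[str]:
        hits = [(name, z) for name, (left, top, right, bottom), z in self.targets
                if left <= x < right and top <= y < bottom]
        for name, _ in sorted(hits, key=lambda h: h[1], reverse=True):
            if self.renderer.is_opaque(name, x, y):
                return name
        return None


class QueryService:
    """Combines the selected verb and target into query text."""

    def __init__(self, renderer: QueryRenderer, verb: str):
        self.renderer = renderer
        self.verb = verb

    def update(self, target: Optional[str]) -> None:
        text = self.verb if target is None else f"{self.verb} {target}"
        self.renderer.show_query(text)


def on_tap(x: int, y: int, target_service: TargetService,
           query_service: QueryService) -> None:
    """Tap flow: identify the target, then refresh the query text."""
    query_service.update(target_service.resolve(x, y))


# Stand-in platform renderers so the sketch runs without a device
class FakeTargetRenderer(TargetRenderer):
    def __init__(self, opaque: Set[Tuple[str, int, int]]):
        self.opaque = opaque

    def is_opaque(self, name: str, x: int, y: int) -> bool:
        return (name, x, y) in self.opaque


class FakeQueryRenderer(QueryRenderer):
    def __init__(self) -> None:
        self.shown: List[str] = []

    def show_query(self, text: str) -> None:
        self.shown.append(text)


scene = [("desk", (40, 120, 260, 300), 1), ("character", (150, 60, 300, 320), 2)]
query_renderer = FakeQueryRenderer()
target_service = TargetService(FakeTargetRenderer({("desk", 200, 200)}), scene)
query_service = QueryService(query_renderer, "Examine")

on_tap(200, 200, target_service, query_service)
print(query_renderer.shown[-1])  # Examine desk
```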

The overall philosophy: Tenterhook.Droid.Core stays lean and only concerns itself with Android-specific stuff. Tenterhook.Core handles the heavy lifting and trusts the renderers to do the right UI things. The boundaries are clear, so adding Tenterhook.iOS.Core in the future should be a straightforward endeavor.

Conclusion

It's been exciting to learn so much and witness so much progress. If you're thinking about taking the plunge with mobile development, consider the powerful combination of Xamarin, long-standing software development principles, and a healthy dose of thoughtful planning.